Renan Krishna

InterDigital Europe

Morteza Kheirkhah

InterDigital Europe

Chathura Sarathchandra

InterDigital Europe

Alain Mourad

InterDigital Europe

The term Extended Reality (XR) includes Augmented Reality (AR), Virtual Reality (VR) and Mixed Reality (MR) applications. In this article, we explore the requirements that XR applications place on networks.

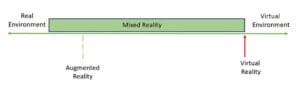

AR augments the physical world of the user by enabling interaction with the virtual world. On the other hand, VR places the user inside a virtual environment generated by a computer. MR merges the real and virtual world along a continuum that connects a completely real environment at one end to a completely virtual environment at the other end. In this continuum (see figure) all combinations of the real and virtual worlds are captured.

To run applications with XR characteristics on mobile devices, computationally intensive tasks need to be carried out in near real-time, hence corresponding computational resources are needed on the device or on an edge server in proximity. Edge computing is an emerging paradigm where computing resources and storage are made available in close proximity at the edge of the Internet to mobile devices and sensors.

In this article, we discuss the issues involved when edge computing resources are offered by network operators to operationalize the requirements of XR applications running on devices with various form factors including Head Mounted Displays (HMD) and smart phones. These devices have limited battery capacity and dissipate heat when running. Additionally, the wireless latency and bandwidth available to the devices fluctuate and the communication link itself might fail.

The real-virtual continuum

The XR technology landscape

The term XR includes AR, VR and MR. The domains in which XR technologies can be used include entertainment, education, industrial manufacturing, marketing, etc.

In AR, the application provides the user with artificially generated information that is overlaid onto the physical environment. This information can be textual, audio or visual, so that, in the user's perception, it becomes part of the real world.

VR involves “inducing targeted behaviour in an organism by using artificial sensory stimulation, while the organism has little or no awareness of the interference”.

MR involves the “merging of real and virtual environments somewhere along the ‘virtual continuum’ which connects completely real environments to completely virtual ones”.

The devices used to run XR applications can focus on visual, audio, or even haptic (touch-based) modalities. These devices can be placed on the head, on the body, handheld or anywhere in the physical environment.

Visual modality for XR applications can be achieved through optical see-through, video see-through or spatial projection-based approaches.

Audio modality for XR applications is typically achieved through speakers on the headset or through headphones. More advanced techniques make a moving user perceive that a sound is emanating from a specific location in the 3D environment. This involves head tracking, spatial sound synthesis and head-related transfer functions (HRTFs).
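To make the role of head tracking concrete, here is a minimal sketch of one spatial cue: the interaural time difference (ITD), i.e. how much earlier a sound reaches one ear than the other. It assumes a spherical head and uses Woodworth's classic approximation rather than a measured HRTF; the constants, the angle convention and the function names are illustrative assumptions, not part of any XR audio API.

```python
import math

# Assumed constants: a typical adult head radius and the speed of sound in air.
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def relative_azimuth(source_azimuth_rad: float, head_yaw_rad: float) -> float:
    """Source direction relative to where the tracked head faces, wrapped to [-pi, pi).

    Convention (an assumption of this sketch): angles in radians, positive to the right.
    """
    return (source_azimuth_rad - head_yaw_rad + math.pi) % (2 * math.pi) - math.pi


def woodworth_itd(rel_azimuth_rad: float) -> float:
    """Interaural time difference in seconds (Woodworth spherical-head approximation).

    Positive means the sound reaches the right ear first. Front and back sources with
    the same lateral angle give the same ITD; a full HRTF is needed to separate them.
    """
    lateral = math.asin(math.sin(rel_azimuth_rad))  # fold into [-pi/2, pi/2]
    magnitude = (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (abs(lateral) + math.sin(abs(lateral)))
    return math.copysign(magnitude, lateral)


# A virtual source fixed 90 degrees to the user's right in the world frame.
source = math.pi / 2
print(woodworth_itd(relative_azimuth(source, head_yaw_rad=0.0)))          # ~0.00066 s
print(woodworth_itd(relative_azimuth(source, head_yaw_rad=math.pi / 2)))  # 0.0
```

When the user turns to face the source, the ITD drops to zero, which is why the renderer needs fresh head-tracking data for every audio block.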

Haptic modality can be achieved by instrumenting physical environments (for example, Disney Research's AIREAL). Alternatively, user perception can be augmented by on-body devices such as wearable vests, gloves, shoes and exoskeletons.

Requirements for communication networks

The goal when delivering both streaming and interactive XR data is to provide an appropriate Quality of Experience (QoE) to the user. In this section we focus on the application's ability to provide the illusion of being present in a stable spatial environment, because this QoE dimension drives the latency and bandwidth requirements placed on the network. The illusion of presence is created through visual, auditory and haptic inputs, and it requires the network to provide data rates above, and latencies below, certain threshold values, as discussed below.

For visual inputs that create an illusion of presence, the display on the AR/VR device should keep the visual input synchronized with the user's head movements. This is necessary to avoid the motion sickness that results from a lag between the moment users move their head and the moment the corresponding video scene is rendered. This lag is often called the “motion-to-photon” delay. Studies have shown that this delay should be at most 20 ms, and preferably 7-15 ms, to avoid motion sickness. In addition, XR applications whose visual inputs support six degrees of freedom (6DoF), which allow users to move within the virtual space, may require data rates on the order of 30 Mbps or higher.
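Because the motion-to-photon delay accumulates across every stage between head movement and display, it can be treated as a budget. The sketch below assumes the stages run strictly in sequence and simply adds them; the stage names and millisecond figures are hypothetical placeholders, not measurements, and real pipelines may overlap stages or use late-stage reprojection.

```python
from dataclasses import dataclass

# Thresholds quoted above: at most 20 ms, preferably within 7-15 ms.
MTP_MAX_MS = 20.0
MTP_PREFERRED_MAX_MS = 15.0


@dataclass
class Stage:
    name: str
    latency_ms: float


def motion_to_photon_ms(stages: list[Stage]) -> float:
    """Total motion-to-photon delay, assuming the stages run strictly one after another."""
    return sum(stage.latency_ms for stage in stages)


def classify(mtp_ms: float) -> str:
    """Compare a motion-to-photon delay against the thresholds above."""
    if mtp_ms <= MTP_PREFERRED_MAX_MS:
        return "preferred"
    if mtp_ms <= MTP_MAX_MS:
        return "acceptable"
    return "risk of motion sickness"


# Hypothetical per-stage figures for an edge-rendered HMD; not measurements.
example_pipeline = [
    Stage("head-pose sensing", 2.0),
    Stage("uplink to edge", 3.0),
    Stage("edge rendering", 5.0),
    Stage("encode, downlink, decode", 4.0),
    Stage("display scan-out", 3.0),
]
total = motion_to_photon_ms(example_pipeline)
print(total, classify(total))  # 17.0 acceptable
```

Framing it this way shows why the network share of the budget is only a few milliseconds once sensing, rendering and display are accounted for.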

For auditory inputs that create an illusion of presence, latencies should be below 60 ms (70% probability) for VR-based isolated auditory stimuli and below 38 ms (70% probability) for AR-based reference tone stimuli. Depending on the configuration of the audio system, audio bit rates of 600 kbps can be expected.

For haptic inputs that create an illusion of presence, latencies of up to 1 ms are acceptable, with data rates of several kilobits per second (kbps).
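The per-modality figures quoted above can be collected into a small lookup table and checked against a link's measured latency and data rate. This is a simplified sketch under stated assumptions: the audio thresholds (which the text gives at 70% probability) are treated as hard limits, 600 kbps is treated as the audio rate to provision, and "several kbps" for haptics is represented by a placeholder of 5 kbps. The names `REQUIREMENTS` and `unmet` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    max_latency_ms: float
    min_rate_kbps: float


# Figures from the text; the haptic rate of 5 kbps is an assumed placeholder.
REQUIREMENTS = {
    "visual_6dof": Requirement(max_latency_ms=20.0, min_rate_kbps=30_000.0),
    "audio_vr": Requirement(max_latency_ms=60.0, min_rate_kbps=600.0),
    "audio_ar": Requirement(max_latency_ms=38.0, min_rate_kbps=600.0),
    "haptic": Requirement(max_latency_ms=1.0, min_rate_kbps=5.0),
}


def unmet(latency_ms: float, rate_kbps: float, modalities: list[str]) -> list[str]:
    """Return the modalities whose latency or data-rate requirement the link fails."""
    failing = []
    for modality in modalities:
        req = REQUIREMENTS[modality]
        if latency_ms > req.max_latency_ms or rate_kbps < req.min_rate_kbps:
            failing.append(modality)
    return failing


# A hypothetical link: 12 ms latency, 50 Mbps available.
print(unmet(12.0, 50_000.0, ["visual_6dof", "audio_ar", "haptic"]))  # ['haptic']
```

The example illustrates the point made above: a link that comfortably carries 6DoF video and audio can still fall far short of the 1 ms haptic bound.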

Edge computing to the rescue

Edge computing is designed around network proximity: it requires mini data centers, each with a small number of servers, to be deployed closer to the location of the user equipment (UE). This proximity is quantified in terms of low round-trip times (RTTs) and the availability of high-bandwidth connectivity between the UE and an edge computing server. Edge servers use cloud technologies that enable them to support offloaded XR applications.
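Since proximity is quantified by RTT and available bandwidth, a UE-side selection rule can be expressed directly in those two terms. The sketch below picks the lowest-RTT candidate that meets both an RTT bound and a bandwidth floor; the server names, the 10 ms RTT bound and the measurement values are hypothetical, and a real deployment would also weigh server load and mobility.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EdgeServer:
    name: str
    rtt_ms: float          # measured round-trip time from the UE
    bandwidth_mbps: float  # available bandwidth between the UE and the server


def pick_edge_server(candidates: list[EdgeServer], max_rtt_ms: float,
                     min_bandwidth_mbps: float) -> Optional[EdgeServer]:
    """Choose the lowest-RTT server that satisfies both the RTT and bandwidth bounds."""
    eligible = [s for s in candidates
                if s.rtt_ms <= max_rtt_ms and s.bandwidth_mbps >= min_bandwidth_mbps]
    return min(eligible, key=lambda s: s.rtt_ms, default=None)


# Hypothetical measurements; 30 Mbps matches the 6DoF video figure quoted earlier.
servers = [
    EdgeServer("edge-a", rtt_ms=8.0, bandwidth_mbps=40.0),
    EdgeServer("edge-b", rtt_ms=5.0, bandwidth_mbps=20.0),
    EdgeServer("regional-cloud", rtt_ms=35.0, bandwidth_mbps=200.0),
]
choice = pick_edge_server(servers, max_rtt_ms=10.0, min_bandwidth_mbps=30.0)
print(choice.name if choice else "no suitable server")  # edge-a
```

Note that the nearest server (`edge-b`) is rejected for lack of bandwidth, and the well-provisioned regional cloud is rejected for its RTT: both dimensions of proximity matter.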

In particular, edge servers apply cloud computing implementation techniques such as disaggregation (breaking vertically integrated systems into independent components with open interfaces, for example using SDN's separation of the data and control planes), virtualization (running multiple independent copies of those components, such as SDN controller apps and Virtual Network Functions, on a common hardware platform) and commoditization (elastically scaling those virtual components across commodity hardware as the workload dictates). These techniques enable XR applications that require low latency and high bandwidth to offload computationally intensive tasks, which would otherwise generate heat and drain the device's battery, to the mini-clouds running on nearby edge servers.
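Whether offloading pays off depends on the trade-off between local computation and the cost of moving data to and from the edge. The sketch below uses a common simplified model from the offloading literature, not a method from this article: local time is cycles divided by device CPU speed, offload time is transfer time plus edge compute time plus one RTT, and device energy is power multiplied by time in each state. All class names, parameters and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float         # CPU cycles the task needs
    upload_bits: float    # input sent to the edge (e.g. pose and sensor data)
    download_bits: float  # result returned (e.g. an encoded frame)


@dataclass
class Setting:
    device_hz: float      # device CPU speed
    edge_hz: float        # CPU speed allotted on the edge server
    uplink_bps: float
    downlink_bps: float
    rtt_s: float
    compute_w: float      # device power while computing locally
    radio_w: float        # device power while transmitting or receiving
    idle_w: float         # device power while waiting for the edge


def local_cost(task: Task, s: Setting) -> tuple[float, float]:
    """(time in s, device energy in J) when the task runs on the device."""
    time_s = task.cycles / s.device_hz
    return time_s, s.compute_w * time_s


def offload_cost(task: Task, s: Setting) -> tuple[float, float]:
    """(time in s, device energy in J) when the task runs on the edge server."""
    radio_s = task.upload_bits / s.uplink_bps + task.download_bits / s.downlink_bps
    wait_s = task.cycles / s.edge_hz + s.rtt_s
    return radio_s + wait_s, s.radio_w * radio_s + s.idle_w * wait_s


def decide(task: Task, s: Setting, deadline_s: float) -> str:
    """Pick the option that meets the deadline; if both do, pick lower device energy."""
    local_t, local_e = local_cost(task, s)
    edge_t, edge_e = offload_cost(task, s)
    local_ok, edge_ok = local_t <= deadline_s, edge_t <= deadline_s
    if edge_ok and (not local_ok or edge_e < local_e):
        return "offload"
    if local_ok:
        return "local"
    return "infeasible"


# Hypothetical per-frame workload and link conditions.
frame = Task(cycles=1e8, upload_bits=2e5, download_bits=4e5)
setting = Setting(device_hz=2e9, edge_hz=2e10, uplink_bps=5e7, downlink_bps=1e8,
                  rtt_s=0.005, compute_w=2.0, radio_w=1.0, idle_w=0.3)
print(decide(frame, setting, deadline_s=0.020))  # offload (about 18 ms vs 50 ms locally)
```

With these figures, offloading meets a 20 ms deadline and also uses far less device energy, which is the heat and battery argument made above; a task with little computation but a large input would favour local execution instead.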

New transport protocols and congestion control mechanisms, used in conjunction with delivery from the edge, are also being investigated to support XR applications [1]. The increased volume of XR data can be delivered over multiple paths using Multipath Transmission Control Protocol (MPTCP). Artificial Intelligence (AI) and Machine Learning (ML) techniques can also be used to predict the user's movement and network conditions.
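Movement prediction lets the edge render for where the user's head will be rather than where it was. As a stand-in for the AI/ML predictors mentioned above, the sketch below uses the simplest baseline: constant angular velocity extrapolation of head yaw from the last two tracking samples. The function name and sample values are hypothetical, and a learned model would replace this rule.

```python
import math


def wrap(angle_rad: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (angle_rad + math.pi) % (2 * math.pi) - math.pi


def predict_yaw(samples: list[tuple[float, float]], horizon_s: float) -> float:
    """Extrapolate head yaw (rad) horizon_s seconds past the last sample.

    samples: (timestamp_s, yaw_rad) pairs in time order; only the last two are used.
    """
    (t0, y0), (t1, y1) = samples[-2], samples[-1]
    dy = wrap(y1 - y0)  # so crossing +/-pi is not read as a near-full turn
    velocity = dy / (t1 - t0)
    return wrap(y1 + velocity * horizon_s)


# Head turning at 2 rad/s; predict 20 ms ahead to cover an assumed network delay.
print(predict_yaw([(0.000, 0.00), (0.010, 0.02)], horizon_s=0.020))  # ~0.06
```

The prediction horizon would typically be set to the expected motion-to-photon delay, so better network-condition estimates directly improve movement prediction.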

3GPP standardization activities

A Release 18 study by Working Group 1 of the Service and System Aspects (SA) group within the 3rd Generation Partnership Project (3GPP) on supporting tactile and multi-modal communication services [2] identifies use cases that include immersive multi-modal VR, immersive VR games and virtual factories.

A 3GPP SA Working Group 2 study on architecture enhancements for XR has recently been approved; it takes as input the requirements specified in the SA Working Group 1 study on supporting tactile and multi-modal communication services [2].

IETF standardization activities

The media operations (MOPS) working group (WG) of the IETF has finalized a draft [3] that provides an overview of operational networking issues that pertain to quality of experience when streaming video and other high-bitrate media over the Internet. Another draft [4] at the MOPS WG explores the issues involved in the use of edge computing resources to operationalize media use cases that involve XR applications.

The Audio/Video Transport Core Maintenance (AVTCORE) WG is standardising new RTP payload formats for the Versatile Video Coding (VVC) and VP9 codecs, and may be expected to develop RTP payload formats for MPEG Immersive Video (MIV) and Video-based Point Cloud Compression (V-PCC).

The Deterministic Networking (DetNet) WG focuses on deterministic data paths that operate over Layer 2 bridged and Layer 3 routed segments, where such paths can provide bounds on latency, loss, and packet delay variation (jitter), and high reliability. The WG has produced XR-related use cases.
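The value of bounded latency for XR is that the network's contribution to the motion-to-photon budget becomes a known worst case rather than an average. The sketch below assumes a simplified model in which each hop guarantees a minimum and maximum delay and these bounds add along the path; actual DetNet bound calculations depend on the queuing mechanisms used. The hop values and the 12 ms network budget are hypothetical.

```python
def end_to_end_bounds(hops: list[tuple[float, float]]) -> tuple[float, float, float]:
    """Combine per-hop (min_ms, max_ms) delay bounds into path-level bounds.

    Assumes bounds simply add along the path. Returns (min_ms, max_ms, jitter_bound_ms).
    """
    min_ms = sum(lo for lo, _ in hops)
    max_ms = sum(hi for _, hi in hops)
    return min_ms, max_ms, max_ms - min_ms


# Hypothetical per-hop bounds (ms) for a path from an edge server to an access point.
path = [(0.5, 1.0), (1.0, 2.5), (0.5, 1.5), (2.0, 6.0)]
min_ms, max_ms, jitter_ms = end_to_end_bounds(path)
network_budget_ms = 12.0  # hypothetical share of the 20 ms motion-to-photon budget
print(min_ms, max_ms, jitter_ms, max_ms <= network_budget_ms)  # 4.0 11.0 7.0 True
```

The jitter bound also tells the receiver how much de-jitter buffering it may need, which itself consumes part of the latency budget.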

The Reliable and Available Wireless (RAW) WG extends DetNet concepts to provide high reliability and availability for IP networks that use scheduled wireless segments and other media. This WG has also produced XR-related use cases.
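One way DetNet achieves high reliability is packet replication and elimination: copies of each packet travel over disjoint paths and duplicates are discarded at the receiver, and RAW work builds on this kind of redundancy over wireless segments. The sketch below shows the basic arithmetic under an optimistic assumption that each path loses packets independently; correlated wireless losses (shared spectrum, interference) would reduce the gain. The loss probabilities are hypothetical.

```python
import math


def delivery_probability(loss_probs: list[float]) -> float:
    """Probability that at least one replica of a packet arrives.

    Assumes each path drops the packet independently with the given probability.
    """
    return 1.0 - math.prod(loss_probs)


print(delivery_probability([0.05]))        # single wireless path, ~0.95
print(delivery_probability([0.05, 0.05]))  # replicated over two paths, ~0.9975
```

Replication trades extra wireless capacity for reliability without the added delay of retransmissions, which matters when the latency budget leaves no room to resend.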

Conclusion

XR applications, which encompass AR, VR and MR, run on mobile devices with different form factors and have stringent low-latency and high-bandwidth requirements. Edge computing, enhanced with new transport protocols, congestion control, multipath delivery and AI/ML prediction of the user's movement and network conditions, is emerging as a solution to the challenges posed by these requirements.

References

[1] M. Kheirkhah, M. M. Kassem, G. Fairhurst, M. K. Marina, “XRC: An Explicit Rate Control for Future Cellular Networks”, ICC 2022 – IEEE International Conference on Communications, 2022, pp. 1-7.

[2] 3GPP TR 22.847

[3] https://datatracker.ietf.org/doc/draft-ietf-mops-streaming-opcons/

[4] https://datatracker.ietf.org/doc/draft-ietf-mops-ar-use-case/